One of the most perplexing dilemmas of postmodern society concerns the individual's grasp of large-scale systems. This is a society that has moved beyond the industrial age and is now rapidly, and often anxiously, constructing the foundations of an informational world and a data-driven human for the coming century. Yet paradoxically, or perhaps precisely because of the ever-growing volume, scope, and complexity of information, individuals find themselves less and less able to grasp such systems. Scientific disciplines are becoming increasingly specialized. Although new fields in science and philosophy continue to emerge, the complexity and endless fragmentation of knowledge into ever-smaller components prevent even the most advanced scholars from achieving a comprehensive perspective or making universal assessments. The information age is, above all, the age of the collapse of determinations and certainties.

In the modern world, the ancient logic of binary oppositions (between chaos and order, darkness and light) has given way to a logic of complexity. The rigid “either/or” has been replaced by “both/and” or even “neither/nor.” In this new logic, ambiguity, approximation, contingency, and probability replace rigidity, precision, certainty, law, and assurance. The various parameters that constitute “external reality” have, due to the astronomical growth in information and the dizzying escalation in the number of “knowns,” become so diverse, interwoven, and recursive that they cast doubt on even the most basic scientific, logical, or behavioral judgments.

The birth of modernity gave rise to the idea—perhaps even the illusion—that the human being is the center of the universe, a center through which all phenomena must be defined. Modern intellectuals emerged from this notion and, for over two centuries, sought to replace ancient beliefs with ideologies that, in essence, followed the same structural patterns: grounded in oppositions between backwardness and progress, ignorance and knowledge, ambiguity and clarity—and the supposed necessity (even historical inevitability) of transitioning from the former to the latter. This logic persisted fundamentally until recent decades. Even the advent of the information revolution did not fully dismantle it, as the binary structure of computers, while deeply transformative, still reflects the most basic form of the traditional human logic of dualities.

The once steadfast and seemingly unshakable convictions of intellectuals have collapsed—not only in the realm of fragile social and political beliefs (as argued by thinkers like Popper and Morin), but also within the domain of scientific laws and principles. Eternal truths and geometrical or mathematical axioms, long seen as the most secure bastions of certainty, were called into question by developments in non-Euclidean geometry and modern mathematics—often by the scientists themselves (Gödel, Heisenberg, Church, among others).

In physics and meteorology, beginning in the 1960s, the recognition of how minute phenomena could produce massive effects through long causal chains undermined the belief that uncertainty was confined only to the observation of the infinitely small. The hope that increasingly powerful computational tools (with greater memory and speed) could at least deliver certainty for the infinitely large also collapsed. As a result, science increasingly came to rely on probability rather than determinism, with fields like statistics—built on the logic of likelihood rather than certainty—gaining widespread success and prominence.

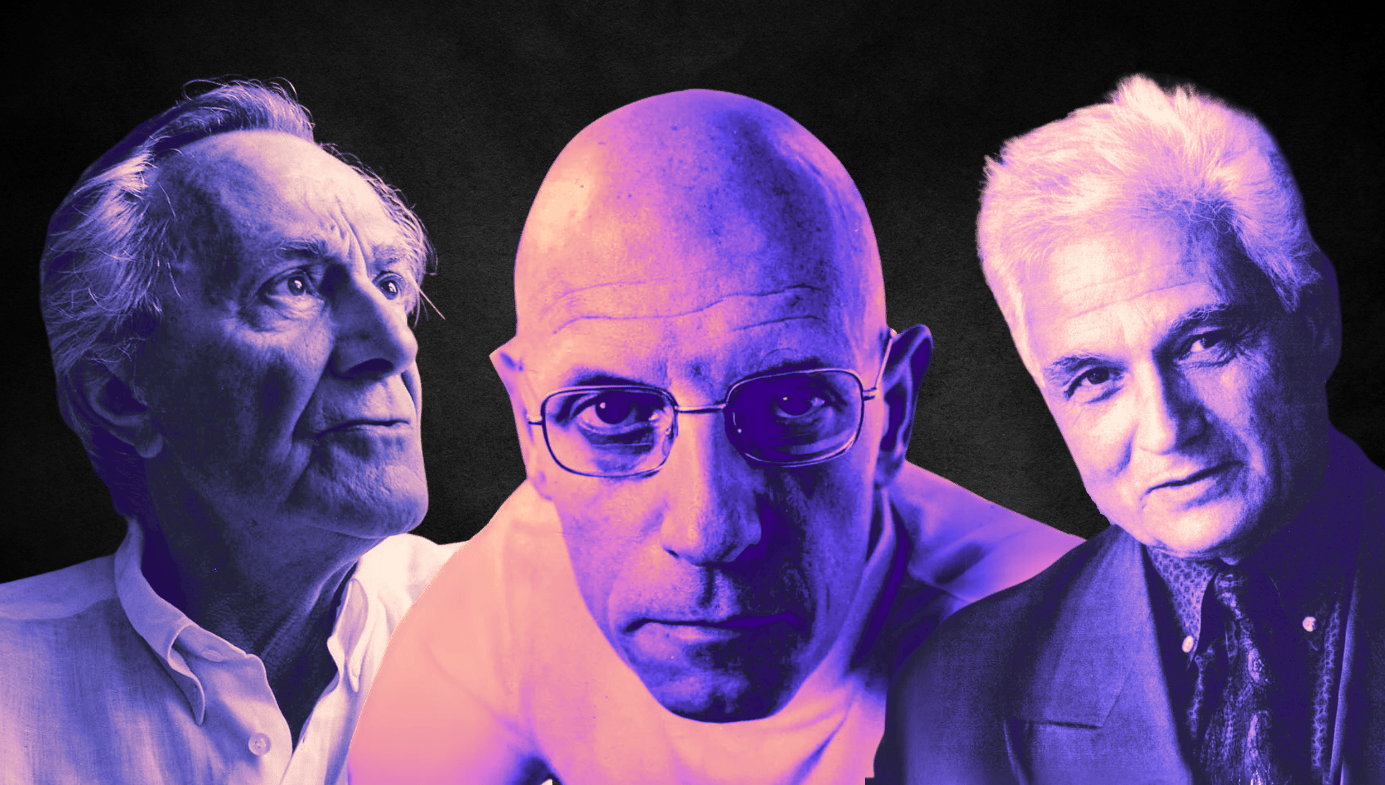

Amid these transformations, the concept of intellectualism underwent a profound evolution in the modern age. In ancient Greece, intellectual life was rooted in the fusion of science and philosophy; during the Middle Ages, it was shaped by religious thought and reformist ideas; and during the revolutionary era, by the optimistic humanism of the Enlightenment. The twentieth century radically altered the role of the intellectual.

Scientific thought advanced rapidly and became so highly specialized and fragmented that it could no longer maintain a connection or solidarity with broader social thinking. As a result, the idea of the intellectual gradually became confined to the realm of social critique. Naturally, this confinement shifted the intellectual’s primary role toward confronting political realities and challenging anti-utopian conditions through critical engagement with the sociopolitical sphere.

The notion of “intellectual commitment” emerged from this very confrontation, reaching its peak in the context of anti-colonial and independence struggles, as well as in economic and anti-capitalist movements. However, the rapid evolution of science, marked by accelerated technological development and the collapse of former concepts and beliefs, soon reshaped the very foundations of modern society. It introduced such a degree of uniformity and global standardization across political, economic, and even behavioral realms that the space for critical thinking progressively diminished. In developing countries, the repressive pressure of authoritarian regimes and, in the developed world, the constraining forces of market logic both served to marginalize critical perspectives. Consequently, intellectual critique was often forced to retreat behind the facade of technocracy, expressing its ideas through the seemingly neutral and dispassionate language of expert analysis.

Postmodern society deprives individuals of the capacity for deep philosophical analysis, transferring that function to institutions. The key to resolving the fundamental dilemma of this society lies in addressing a contradiction: on the one hand, the flourishing of thought and individual freedom brought about by social development; on the other, the narrowing scope of comprehension and analytical capacity caused by the overwhelming growth of knowledge.

This text is an AI-assisted translation of a note, the original of which can be found at the following link: